I just finished uploading the initial beta version of my latest project, FMStudio, to GitHub.

What is FMStudio, you ask?

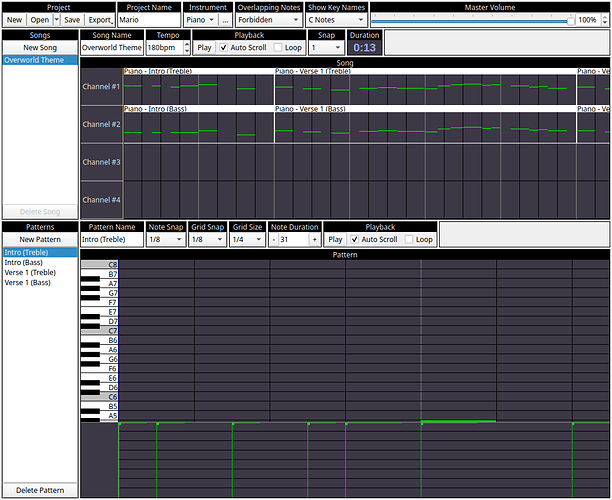

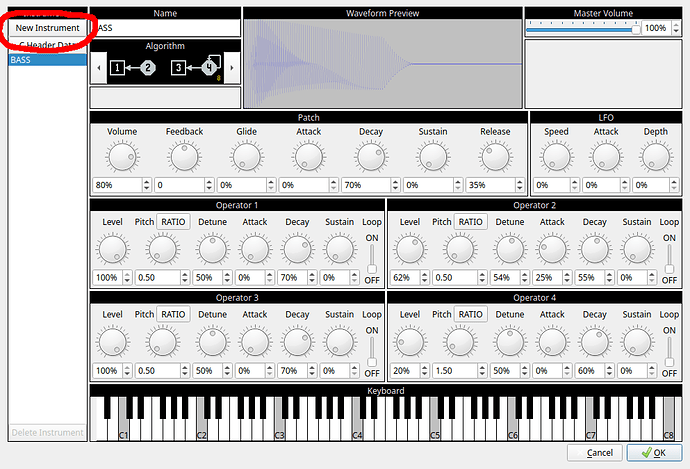

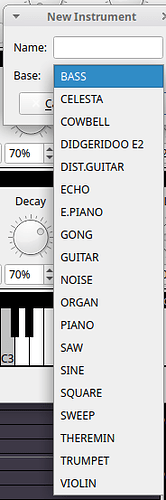

FMStudio was inspired by @jpfli’s awesome FMSynth library. It lets you customize instruments through a GUI and build entire songs out of reusable patterns. Currently it can export FMSynth patches as C header code usable with FMSynth on hardware, and it can export songs as RAW audio. Later I will be working on exporting songs in a C header format that is similar to SimpleTune but slightly more complex, which will allow including songs directly in your code using minimal space. The beauty is that if you want to create more complex pieces (like having more than one note playing at a time within a single pattern), or your game is too resource intensive to generate the music on the fly, you can always just export the songs as RAW audio (8000 Hz, 8-bit unsigned PCM) to be streamed from SD.
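If you want to preview an exported RAW file on a desktop before putting it on SD, one option is to wrap it in a WAV header. This is only a rough sketch using Python's standard `wave` module: the sample rate and sample format come from the export format above, but mono output is my assumption, and the function names are made up for illustration.

```python
import wave

SAMPLE_RATE_HZ = 8000    # export format: 8000 Hz
SAMPLE_WIDTH_BYTES = 1   # export format: 8-bit (WAV also stores 8-bit as unsigned)
CHANNELS = 1             # assumption: the RAW export is mono


def duration_seconds(raw_bytes: bytes) -> float:
    """Length of the clip: one byte per sample at 8000 samples per second."""
    return len(raw_bytes) / (SAMPLE_RATE_HZ * SAMPLE_WIDTH_BYTES * CHANNELS)


def raw_to_wav(raw_bytes: bytes, wav_path: str) -> None:
    """Write the unmodified RAW samples into a WAV container."""
    with wave.open(wav_path, "wb") as wav_file:
        wav_file.setnchannels(CHANNELS)
        wav_file.setsampwidth(SAMPLE_WIDTH_BYTES)
        wav_file.setframerate(SAMPLE_RATE_HZ)
        wav_file.writeframes(raw_bytes)
```

Because 8-bit WAV data is unsigned as well, no sample conversion is needed; the bytes are copied as-is.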

The interface should be fairly easy to learn, as you simply move the cursor around to place notes/patterns (it will show a semi-transparent copy of what you’ll be placing if the placement is valid). You can adjust the note duration, duration snapping, grid snapping, and all sorts of other settings. FMStudio also supports adjusting each note’s velocity (between 0 and 127), which affects the note’s overall volume (you can create some neat effects using this). There’s also a virtual piano keyboard that you can click on to hear individual notes based on the selected instrument.
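To give a feel for how a 0 to 127 velocity can map onto volume, here is a small sketch. It assumes a simple linear curve applied to 8-bit unsigned samples; that is just an illustration, not necessarily how FMStudio or FMSynth scale velocity internally, and both function names are hypothetical.

```python
MAX_VELOCITY = 127
MIDPOINT = 128  # silence level for 8-bit unsigned PCM


def velocity_to_gain(velocity: int) -> float:
    """Map a velocity in [0, 127] to a gain in [0.0, 1.0] (assumed linear)."""
    if not 0 <= velocity <= MAX_VELOCITY:
        raise ValueError("velocity must be between 0 and 127")
    return velocity / MAX_VELOCITY


def apply_velocity(sample_u8: int, velocity: int) -> int:
    """Scale one unsigned 8-bit sample around the midpoint by the velocity gain."""
    offset = sample_u8 - MIDPOINT            # signed distance from silence
    scaled = MIDPOINT + round(offset * velocity_to_gain(velocity))
    return max(0, min(255, scaled))          # clamp to the 8-bit range
```

With this curve, a velocity of 127 leaves the sample untouched and a velocity of 0 flattens it to silence.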

Patterns are not tied to specific instruments either, allowing you to play the same melody across different instruments for added effect.

There is also an “Overlapping Notes” section where you can tell it to prevent overlapping notes (it won’t allow you to place them), highlight any overlapping sections red, or allow overlapping notes (you can place them and it won’t highlight them).
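The three overlap modes can be sketched roughly like this. It is a simplified model and not FMStudio's actual code: I'm assuming a note is just a start time, a duration, and a pitch, and that two notes overlap when their time ranges intersect. The `Note`, `OverlapMode`, and `try_place` names are invented for the example.

```python
from dataclasses import dataclass
from enum import Enum


class OverlapMode(Enum):
    PREVENT = "prevent"      # refuse to place overlapping notes
    HIGHLIGHT = "highlight"  # place them, but report the clashes
    ALLOW = "allow"          # place them silently


@dataclass(frozen=True)
class Note:
    start: int     # position on the grid (hypothetical units)
    duration: int  # length in the same units
    pitch: int


def overlaps(a: Note, b: Note) -> bool:
    """True when the half-open time ranges [start, start + duration) intersect."""
    return a.start < b.start + b.duration and b.start < a.start + a.duration


def try_place(notes: list, new_note: Note, mode: OverlapMode):
    """Try to add new_note; return (placed, existing notes to highlight)."""
    clashes = [n for n in notes if overlaps(n, new_note)]
    if clashes and mode is OverlapMode.PREVENT:
        return False, []
    notes.append(new_note)
    if mode is OverlapMode.HIGHLIGHT:
        return True, clashes
    return True, []
```

Notes that merely touch (one ends exactly where the next begins) do not count as overlapping in this sketch.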

Projects are stored in FMX files (plain-text JSON) so that later, when exporting to C header, all songs will share one set of instruments instead of each song carrying its own copy of the instruments it uses (and thus duplicating instrument data).

I’m curious to see what others are able to make with this (both instruments and songs). I’ve included a mario.fmx project, which has the first few measures from the overworld theme to demonstrate some features. There’s also an experiments.fmx, which contains my current experimental songs/instruments/patterns (I particularly like the Heavy Bass instrument I made).

GitHub

Binaries: v0.2.1

- Windows (Simply extract the zip file anywhere you’d like).

- Linux (Simply extract the tarball and run FMStudio).

- macOS (Simply open the DMG and drag the app bundle into the Applications folder, or run it directly from the DMG).

Trying to create a Mac binary, but currently clang is having issues with the FixedPoint library, so hopefully I can sort that out at some point. Linux binaries would also be nice, but Linux is notorious for not being very binary-distribution friendly.