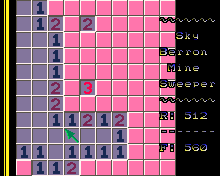

SkyBerron Minesweeper [WIP]

SkyBerron Minesweeper.bin (37.4 KB)

I love MineSweeper!

Same here, this may usurp 2048 on mine.

Without meaning to sound like a broken record, will there be source code available?

I’m still working on the one-line buffer graphics engine: functionality, interface, performance. The game is not finished yet. The Pico 8 version I’m coding in parallel is pretty laggy and unfinished. The source code is a mess and needs refactoring. Once those tasks are done and minimum quality is met, everything will be published. Meanwhile, I’m switching to a new game prototype to stress test the engine under different game requirements.

Don’t worry. Nobody’s going to read it unless there’s a bug that seems “easy” to fix.

I’d read it. For me reading the source is just as much fun as playing the games, if not more so in some cases.

(And if someone says “it’s not finished” then I can usually resist the urge to start suggesting improvements. Usually. Unless I’m particularly code starved. :P.)

There is no reason to hide the source until it’s finished, but that’s just my suggestion of course. Don’t worry, no one expects work-in-progress code to be even close to perfect.

You are making two versions of the same game for two different platforms? In this case I’d suggest you make just one game that runs on both platforms, this is a great opportunity to practice this – there is no reason to code the same thing twice. Put the shared code into a core module and make a small front end for each platform. Or is this what you’re doing already?

I’m better at coding in LUA. It’s a fact I haven’t used C++ for many years. I’m very lazy at typing and Pico 8 programs (mostly LUA) tend to be more compact: I have written many code snippets, even simple games, that fit in 2 tweets length. And I feel more confident debugging there… maybe trial&error cycles are shorter. So, currently I start coding in LUA (code and graphics) and when basic logic begins to work, it’s time to port. At one point, I get both C++ and LUA versions working.

My code is ugly because of direct porting and because I’m bypassing most C++ “advanced” features as my brain doesn’t like them. Now I’m setting the focus on the graphics engine, not the games themselves. Anyway, I’d like to remake some of the games I played when I was a child (and not so child). Awww, how many hours wasted playing MineSweeper…

You’re not alone, just use C

Minesweeper is one of my favorites too.

Lua’s also one of my favourite languages.

(Though it’s “Lua”, not “LUA”. It’s not an acronym, it’s Portuguese for “Moon”.)

I hope to some day get Lua working on Pokitto,

but the source code takes quite a bit of digging through.

What do you consider advanced?

Pointers? Types? Functions? Classes?

I consider “advanced” anything beyond good old C: i.e. OOP, classes, templates, virtual classes and functions, operator overloading, runtime type checks, exceptions, getters/setters, and many other bells and whistles C++ offers that I don’t know of.

Pointers? I use them to pass arrays to functions, also useful to speed up memory transfers. Definitely classic, not advanced. I had enough fun with them in the past (null ptr assignments and seg faults) not to fear them.

Types? I use classic types, plus bool. Also some structs to pack object data.

Functions? I try to keep as few as possible plus some small helper functions declared inline. I use references for input/output arguments and declare variables near the point they get used (using C that was not always possible).

Classes? I use them, but no inheritance nor polymorphism nor virtual classes nor operator overloading nor derivation. No math/algebra classes, thank you. Though I admit I use some member functions and encapsulation helps a lot. I’d like to learn to use entity component system in games.

Also: I don’t allocate nor free dynamic memory inside main game loop (all new/delete in the init function, executed before game loop), I rely a lot on fixed size arrays, I avoid global variables. For small projects, I use as few src and header files as possible. I usually stick to classic readable C, write explicit casts and use parentheses in excess to make precedence clear.

I love two letter variable names (sometimes numbered) inside functions because I’m too lazy. A short meaningless name means they’re not worth the effort of naming them properly. No C++ std lib, only C standard libs, sorry. For text, I use fixed char buffers and zero ended strings, although I hate them and prefer String class whenever dyn mem isn’t an issue. I still use classic enums to name states and some #defines for constant, predefined values and enabling debugging code.

Result: ugly code. If I were you, I would not peek inside code I have written in a hurry, which happens to be most of the code I write.

As I say, you can simply use C – actually from what you’re writing it seems like you prefer writing C and you just feel bad about it. I’d say don’t. We greatly disagree with @Pharap on this, but I think, and am not alone, that you don’t need C++ and OOP at all, and especially for these small programs they’re a super overkill. I used to write C++ for years but I only use C now because I’ve found it’s simply better. I would only recommend using C++/OOP here if you’re specifically aiming to learn it this way.

That’s for really complex games. Even many much bigger PC games don’t benefit from these design patterns; they only become useful in really big projects and engines (I remember once collaborating on a big C++ engine for a PC game with a small team, and we considered entity/component but still dropped it because it added too much complexity). In this case it’s best to try to contribute to some existing big FOSS games. Again, if you just aim to learn this and don’t care if the design is really helpful, then by all means do it.

I think on Pokitto we prefer not allocating on heap, but correct me if I’m wrong. Basically everyone uses fixed size arrays here and allocation just on stack.

Global variables aren’t as evil as they teach in schools, but they have to be used with care – always consider every specific case, global state is sometimes the most elegant solution.

I think what you call ugly is simply preferring C before C++, which I do too and I proudly present my code, so don’t be ashamed, I’m already embarrassing myself this way.

Quite a mixed list of things.

Personally I don’t even consider all those things in the same category.

Classes are cheap and not particularly complex.

(I would argue that if C had given its structs member functions then it would have been enough to have been classed as OOP.)

Operator overloading is arguably even simpler than classes.

RTTI, exceptions and virtual functions have the potential to be costly so they are certainly more contentious issues, and I would agree that they are more intermediate.

I would also say that even desktop programs should avoid RTTI when possible,

not so much for the added cost (though that is a factor) but because it often leads to poor design - virtual functions are usually a better alternative.

Templates are probably the most advanced thing on that list,

but only because of what they are capable of.

Simpler uses of templates are very simple to understand.

E.g. std::min and std::max, std::array (which is no more expensive than a regular array), std::vector
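As a rough illustration of the "simple" end of templates, here is a minimal sketch. The names `clampValue` and `sumOf` are made up for this example and are not from anyone's code in this thread.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>

// One definition serves every type that supports operator<.
template<typename Type>
Type clampValue(Type value, Type low, Type high)
{
    assert(!(high < low));
    return std::max(low, std::min(value, high));
}

// std::array carries its length in its type, so no separate length
// argument is needed and no heap memory is involved.
template<std::size_t size>
int sumOf(const std::array<int, size> & values)
{
    int total = 0;
    for (int value : values)
        total += value;
    return total;
}
```

Calling `clampValue(15, 0, 10)` instantiates the function for `int`, and `clampValue(1.5f, 0.0f, 1.0f)` would instantiate it for `float`, without writing the body twice.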

The difficulty comes with things like template specialisation and non-type template parameters,

because those are what give templates their Turing completeness.

If it weren’t for those then C++'s templates would probably be no more powerful than C# or Java’s generics.

That’s good.

(I hasten to point out that C doesn’t have references.)

Inheritance generally doesn’t have a notable memory cost (compared to declaring an equivalent class that doesn’t use inheritance), so I find that somewhat strange.

I can understand not wanting to use virtual functions to a degree because they do have a cost.

Though that cost is relative. On the Pokitto the cost isn’t too bad in my experience.

On something running an AVR chip the cost is more notable (I completely avoid virtual functions on the Arduboy).

However, not using inheritance and polymorphism does not make the use of classes non-OOP.

Simply having member variables and member functions is enough to satisfy the most basic definition of OOP.

Inheritance and subtype polymorphism are not inherently required.

I don’t understand why operator overloading would be a contentious issue.

Operators are just functions, operator overloading is no more of an issue than function overloading.

Writing a + b with an overloaded operator costs no more than using add(a, b).
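To make that concrete, a small sketch with a hypothetical `Vector2` type (the type and function names are assumptions for illustration only). The overloaded operator is an ordinary function that happens to have a symbolic name.

```cpp
#include <cassert>

// Hypothetical two-component vector, used only for illustration.
struct Vector2
{
    int x;
    int y;
};

// The named-function spelling.
inline Vector2 add(Vector2 left, Vector2 right)
{
    return Vector2{ left.x + right.x, left.y + right.y };
}

// The operator spelling: the same function under a different name.
inline Vector2 operator+(Vector2 left, Vector2 right)
{
    return add(left, right);
}

// Both spellings produce the same result.
inline void operatorExample()
{
    const Vector2 a = { 1, 2 };
    const Vector2 b = { 3, 4 };
    const Vector2 viaOperator = a + b;
    const Vector2 viaFunction = add(a, b);
    assert(viaOperator.x == viaFunction.x);
    assert(viaOperator.y == viaFunction.y);
}
```

Both functions are small enough that a compiler would normally inline them, so there should be nothing left to tell them apart in the generated code.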

I’m not sure what you mean by derivation.

I’m not sure what the problem is here.

If you’re doing that then you probably don’t actually need the new or delete and could just allocate everything statically.
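One possible shape for "allocating everything statically" is a fixed-capacity pool. This is only a sketch under assumptions: `Particle`, `ParticlePool` and the capacity of 32 are invented for the example and have nothing to do with the actual game code.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical game object; the fields are placeholders.
struct Particle
{
    int x;
    int y;
    bool active;
};

// Fixed-capacity pool. The storage is part of the object itself, so an
// instance with static storage duration needs no heap allocation at all.
template<std::size_t capacity>
class ParticlePool
{
public:
    // Claims the first free slot, or returns nullptr if the pool is full.
    Particle * spawn(int x, int y)
    {
        for (Particle & particle : particles)
        {
            if (!particle.active)
            {
                particle.x = x;
                particle.y = y;
                particle.active = true;
                return &particle;
            }
        }
        return nullptr;
    }

    // Marks a slot as free again; the memory itself is never released.
    void release(Particle * particle)
    {
        assert(particle != nullptr);
        particle->active = false;
    }

    // Counts the slots currently in use.
    std::size_t countActive() const
    {
        std::size_t count = 0;
        for (const Particle & particle : particles)
            if (particle.active)
                ++count;
        return count;
    }

private:
    // Value-initialised, so every slot starts out inactive.
    std::array<Particle, capacity> particles{};
};

// Static storage duration: the memory is reserved before main() runs,
// with no new or delete anywhere.
ParticlePool<32> particlePool;
```

The trade-off is that the capacity has to be chosen up front, but a full pool shows up as a `nullptr` that can be handled rather than as heap exhaustion mid-game.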

That requires virtual functions, and possibly runtime type information.

http://gameprogrammingpatterns.com/component.html

In general entity component systems are better suited to desktop games because there is an overhead to them.

There is a tipping point at which the benefits outweigh the costs,

and I doubt many Pokitto games would reach that point.

That’s not necessarily a bad thing, particularly on an embedded system.

That’s also a good thing, assuming that you’re relying on member variables instead.

I slightly disagree with this.

I’d rather have lots of small files than one or two really long files.

On the Pokitto there’s not much choice there because the Pokitto library is implemented as a series of C++ classes.

(Java and Python are alternative options.)

Explicit casting is good, though C++-style casting is better than C casting because it helps to avoid mistakes (e.g. casting away const) and highlight the more ‘dangerous’ conversions (e.g. reinterpret_cast is more dangerous than static_cast).
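A short sketch of the difference, using made-up helper names (`ratio`, `toByte`, `firstValue`) purely for illustration:

```cpp
#include <cassert>
#include <cstdint>

// static_cast marks an ordinary value conversion and is easy to search for.
inline float ratio(int part, int whole)
{
    assert(whole != 0);
    return static_cast<float>(part) / static_cast<float>(whole);
}

// The narrowing is explicit: values outside 0..255 wrap modulo 256.
inline std::uint8_t toByte(unsigned int value)
{
    return static_cast<std::uint8_t>(value);
}

inline int firstValue(const int * values)
{
    // A C-style cast would silently discard const here:
    //     int * writable = (int *)values;
    // In C++ style that has to be spelled const_cast<int *>(values),
    // and static_cast<int *>(values) simply does not compile.
    return values[0];
}
```

The C-style cast picks whichever conversion makes the expression compile, which is exactly why it can hide a `const` removal or a reinterpretation that nobody intended.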

I consider that a good thing, so I do that as well.

I quite disagree with this.

I remember when I was first learning to program and I’d come across code using single-letter variable names and I would really struggle to understand what it was doing.

Finding code that used meaningful names made the learning process a lot easier.

Consequently I try to always use meaningful names and try to make my code as self-explanatory as possible, even down to using index instead of i when indexing arrays.

I strive to write the kind of code that would have helped my younger self.

That part I find particularly strange.

The C++ standard library can outperform the C standard library in some cases.

E.g. std::sort outperforms the old-fashioned qsort because it can be better optimised and doesn’t rely on function pointers.
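For comparison, here are the two calls side by side in a minimal sketch. The wrapper names are invented for the example, and it makes no timing claim of its own; it only shows where the function pointer comes in.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Comparison callback in the form qsort requires: it receives untyped
// pointers and is reached through a function pointer on every comparison.
inline int compareInts(const void * left, const void * right)
{
    const int a = *static_cast<const int *>(left);
    const int b = *static_cast<const int *>(right);
    return (a > b) - (a < b);
}

inline void sortWithQsort(int * values, std::size_t count)
{
    std::qsort(values, count, sizeof(int), compareInts);
}

// std::sort is a template, so the comparison (operator< here) is known
// at compile time and can be inlined into the sorting code.
inline void sortWithStdSort(int * values, std::size_t count)
{
    std::sort(values, values + count);
}

// Both calls produce the same ascending order.
inline void sortExample()
{
    int first[] = { 5, 3, 9, 1 };
    int second[] = { 5, 3, 9, 1 };
    sortWithQsort(first, 4);
    sortWithStdSort(second, 4);
    assert(std::is_sorted(first, first + 4));
    assert(std::equal(first, first + 4, second));
}
```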

There’s nothing inherently wrong with that,

particularly in an environment with limited memory.

Using macros to enable and disable debug code (i.e. ‘conditional compilation’) is fine,

but using macros for constants should generally be avoided.

‘classic’ enums are better for states than using macros and integers,

but modern scoped enums are better than both because they’re more type safe.
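Roughly what that could look like for a Minesweeper-style game. Everything named here (`boardWidth`, `GameState`, `nextState`, the `DEBUG_BUILD` macro) is an assumption made up for the sketch, not taken from the actual source.

```cpp
#include <cassert>
#include <cstdint>

// Typed, scoped constants instead of: #define BOARD_WIDTH 16
constexpr int boardWidth = 16;
constexpr int boardHeight = 16;
constexpr int cellCount = boardWidth * boardHeight;

// Scoped enum: the names don't leak into the surrounding scope and
// there is no implicit conversion to or from int.
enum class GameState : std::uint8_t
{
    Title,
    Playing,
    Won,
    Lost,
};

inline GameState nextState(GameState state, bool hitMine, bool allCleared)
{
    switch (state)
    {
        case GameState::Title:
            return GameState::Playing;
        case GameState::Playing:
            if (hitMine)
                return GameState::Lost;
            return allCleared ? GameState::Won : GameState::Playing;
        case GameState::Won:
        case GameState::Lost:
            return GameState::Title;
    }
    return state;
}

// Conditional compilation is still a reasonable job for a macro.
inline bool isDebugBuild()
{
#ifdef DEBUG_BUILD
    return true;
#else
    return false;
#endif
}

inline void stateExample()
{
    assert(nextState(GameState::Playing, true, false) == GameState::Lost);
    // GameState state = 2;   // would not compile: no implicit conversion
}
```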

C code doesn’t have to be ugly.

There is a limit to how ‘nice’ C code can be because of some of its imposed restrictions, but it is possible (in my opinion) to write ‘nice’ C code.

In my experience there’s more ‘ugly’ C code in the world than there is ‘nice’ C code.

However, I’d say that’s true for other languages too, even C++.

(I’ve found quite a lot of projects on GitHub that have what I’d call ‘ugly’ C++ code.)

@drummyfish I won’t reply to all of what you said because that will just derail things even more, but I will say a few things.

Firstly, C++ and OOP are different things, neither implies the other.

If I wrote a program that didn’t use any classes but used function overloading or declared a variable half way through a block scope, that program would still be C++ rather than C.

Secondly, unless something’s changed that I’m not aware of,

you can’t actually program the Pokitto (solely) in C if you also want to use the Pokitto library (without writing a wrapper for it).

To date nobody has written a Pokitto program in pure C as far as I’m aware.

That may change when FManga’s recent minimal library project is finished.

It’s kind of half and half.

Personally I think the Pokitto has enough RAM that you can usually get away with using a bit of dynamic allocation.

In fact, every micropython program uses dynamic memory implicitly,

so those are a good example of how much dynamic allocation you can get away with.

However, I would advise restricting dynamic memory allocation where possible, even on desktop.

The extent that you should go to to avoid it depends on the circumstances,

but as a general rule if you know you can avoid using it (particularly if avoiding it wouldn’t take much effort) then you should avoid it.

Aside from one or two things, the code for this isn’t too bad overall.

My biggest complaint would be that some things aren’t being qualified with std:: that should be (e.g. size_t and memcpy).

(Although if you’re trying to be pre-C++11 compliant, you might want to get rid of some of the autos that are dotted around.

If you’re not then you could be using more C++11 features, e.g. using instead of typedef.)
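For anyone unsure what those two suggestions look like in practice, a small sketch. The alias and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <type_traits>

// Pre-C++11 spelling of a function pointer type.
typedef int (*OldTransform)(int);

// C++11 alias: the new name comes first and the type reads left to right.
using Transform = int (*)(int);

static_assert(std::is_same<OldTransform, Transform>::value,
    "both spellings name the same type");

inline int applyTwice(Transform transform, int value)
{
    assert(transform != nullptr);
    return transform(transform(value));
}

inline int doubleIt(int value)
{
    return value * 2;
}

// size_t and memcpy spelled with their namespace, as declared by the
// <cstddef> and <cstring> headers.
inline void copyInts(int * destination, const int * source, std::size_t count)
{
    std::memcpy(destination, source, count * sizeof(int));
}
```

The unqualified `size_t` and `memcpy` usually compile too, because most implementations also declare them globally, but only the `std::` versions are guaranteed by the `<c...>` headers.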

Thanks, @Pharap. I mostly agree with everything you have pointed out. I only disagree on always using detailed names even for unimportant vars and on relying too much on OOP features, as I feel you lose control/vision of what’s really going on under the hood. Imho, excessive OOP leads to slow bloated software, but I can’t prove that claim. Obviously, in a production/enterprise environment or collaborative team work, I would have to stick to the “rules” and write crystal clear code, and that would mean too much typing and too much time invested, although as you pointed out, the code documents itself this way. Documenting code is so boring…

#metoo, my rules are these: if a variable has wide scope, it should be well named as to make it always clear what’s in it, while in short scope vars the name can be simpler/shorter (which has advantages) because you can see what’s in it. E.g. if you have a global variable, you should name it something like g_timeFromStartMs, while a local variable holding the same value inside a very short function can be named simply t.

I won’t drag this on too much longer, but as you raised some other points:

For the most part I don’t find that to be the case.

For a class with no custom constructors and no virtual functions the semantics are the same as passing around the kind of structs C uses, which is more or less just copying bytes around.
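This can be checked at compile time. A minimal sketch with a hypothetical `Cell` class, invented for the example:

```cpp
#include <cassert>
#include <cstring>
#include <type_traits>

// A class with member functions and private data, but no custom
// constructors and no virtual functions.
class Cell
{
public:
    bool isMine() const { return mine; }
    void setMine(bool value) { mine = value; }
    unsigned char getNeighbours() const { return neighbours; }
    void setNeighbours(unsigned char value) { neighbours = value; }

private:
    bool mine;
    unsigned char neighbours;
};

// The compiler confirms that copying a Cell is just copying its bytes,
// exactly as it would be for a plain C struct.
static_assert(std::is_trivially_copyable<Cell>::value,
    "Cell can be copied byte by byte");
static_assert(std::is_standard_layout<Cell>::value,
    "Cell has a C-compatible layout");

// Copies a Cell the way C code would copy a struct.
inline Cell copyBytes(const Cell & source)
{
    Cell copy;
    std::memcpy(&copy, &source, sizeof(Cell));
    return copy;
}

inline void cellExample()
{
    Cell original{};
    original.setMine(true);
    const Cell copy = copyBytes(original);
    assert(copy.isMine());
}
```

Adding a virtual function to `Cell` would make both `static_assert`s fail, which is a cheap way to notice when a class stops being "just bytes".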

Having virtual functions adds only a few extra implications.

General implications:

Actually calling a virtual function typically entails:

Where it can get difficult is when the object owns some kind of resource,

(e.g. a file, a dynamically allocated block of memory)

because you then need to know whether the resource is being copied or moved to determine the cost.

But typically the compiler’s quite good at picking the right operation,

and if in doubt you can always force a move with std::move.
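A sketch of the resource-owning case, assuming a made-up `PixelBuffer` class that owns a heap block through `std::unique_ptr`:

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>

// Hypothetical buffer that owns dynamically allocated memory.
// Holding a std::unique_ptr makes the class move-only: the compiler
// generates the move operations and refuses to generate copies.
class PixelBuffer
{
public:
    explicit PixelBuffer(std::size_t length) :
        pixels(new unsigned char[length]()),
        length(length)
    {
    }

    std::size_t getLength() const { return length; }

    // True once ownership has been moved elsewhere.
    bool isEmpty() const { return pixels == nullptr; }

private:
    std::unique_ptr<unsigned char[]> pixels;
    std::size_t length;
};

inline void moveExample()
{
    PixelBuffer first(64);

    // PixelBuffer second = first;          // would not compile: no copy
    PixelBuffer second = std::move(first);  // ownership is transferred

    assert(first.isEmpty());
    assert(!second.isEmpty());
    // The block is freed exactly once, when second goes out of scope.
}
```

The move only transfers a pointer, so it costs about the same as copying a small struct, whereas a copy (if the class allowed one) would have to allocate and duplicate the whole block.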

(Non-copyable resources are pretty simple to understand because they can only ever be moved.)

And of course, at least you can be assured that there will be no resource leaks,

which saves a lot of headaches in the long run.

(Assuming the class was written properly of course.)

Excessive use of anything with a memory or performance cost can lead to slow software.

Dynamic allocation is often a worse offender anyway.

(Some implementations of malloc/new have to walk a linked list to allocate a block.)

I don’t disagree, I helped document a fair chunk of FemtoLib and I could only keep it up for a day or so before I lost interest.

That’s also one of the reasons why the Pokitto library isn’t documented better.

(The other being that it was written by so many people and so few comments were left that it’s not always clear what’s going on.)

Here’s a thought for you though: What about your future self?

When I look at something I wrote last year with meaningful variables and a few nice comments, I thank myself for doing so.

When I look at something from before I started doing that,

it takes me ages to unpick what it’s doing and I curse myself several times over in the process.

When I look at something I wrote several years before:

A) if I understand it, I curse myself for the poor implementation.

B) if I don’t understand it, I curse myself for not being able to understand it.

If I need to solve the same problem, either I copy&paste or redo. If I redo, I usually get a slightly better quality and that is how my coding skills improve: reinventing the wheel.

While I don’t disagree about redoing old projects,

good names and comments help to mitigate scenario B.

Finding a poor implementation should probably be celebrated,

because the fact you recognise that it’s poor and realise that you could do better signifies that your skill has improved.

@SkyBerron doing the same for this one

I will make a proper WIP category and reorganize soon