You could always write your programs in assembly,

then you’ll have lots of silent bugs to catch.

Better yet, use butterflies. :P

I don’t disagree, it is unfortunate,

and it’s not even the most awkward part of C++'s IO streams.

However, that’s a limitation of how they’ve chosen to implement the system rather than a limitation of the language.

It would be entirely possible to make something that isn’t stateful.

For example...

template<typename Int>

struct hex_format_t

{

Int value;

};

template<typename Int>

hex_format_t<Int> format_hex(Int value)

{

return { value };

}

// This would either need to be done in a generic way,

// or have a specific implementation for each integer type.

std::ostream & operator <<(std::ostream & stream, hex_format_t<std::uint8_t> & format)

{

constexpr char digits[] { '0', '1', '2', '3', '4', '5', '6', '7', '8', '9', 'A', 'B', 'C', 'D', 'E', 'F', };

stream << digits[(format.value >> 4) & 0xF] << digits[(format.value >> 0) & 0xF];

return stream;

}

Which you’d then use as std::cout << format_hex(42) << '\n';.

(The idea would of course be to make this work for all integer types, not just std::uint8_t.)

That’s a very simple example, it would be possible to create something that can accomodate lots of different formats and even combine them.

And of course you can always create functions to hide the state changing if it’s something you’re going to be doing a lot.

For example...

template<typename Int>

struct format_08X_t

{

Int value;

};

template<typename Int>

format_08X_t<Int> format_08X(Int value)

{

return { value };

}

std::ostream & operator <<(std::ostream & stream, hex_format_t<std::uint8_t> & format)

{

std::iot state(nullptr);

state.copyfmt(stream);

stream << "0x";

stream << std::hex << std::uppercase << std::setw(8) << std::setfill('0');

stream << format.value;

stream.copyfmt(state);

return stream;

}

Then you’d just std::cout << "In hex: " << format_08X(42) << '\n';

There’s probably at least half a dozen other tricks you could do to make the format saving code less obtrusive. (I can think of at least one easy one that would make the 'copyfmtstuff part of the<<` chain.)

It’s probably worth pointing out that in C++20 they’re planning to introduce std::format which is more along the lines of how C#'s string formatting works.

It still has some of the flaws of std::printf but I believe it’s fixed the extensibility part at least.

It will, but that’s a compiler extension, it’s not part of the language.

VC++ will also warn about it, but by rights it should be a hard error, not just a warning.

On the one hand if copying is expensive then resizing the array is expensive, yes.

(Strictly speaking, as of C++11 it’s almost certainly a move rather than a copy, so something like a vector of vectors would be as cheap as doing a pointer copy.)

On the other hand, arrays of pointers really upset the cache,

and on a modern CPU, a lot of the time the cache miss is more expensive than the copy.

That said, it’s not a dichotomy, there are other options.

About std::deque

One decent alternative is to use std::deque which doesn’t do any object copying when it needs more memory.

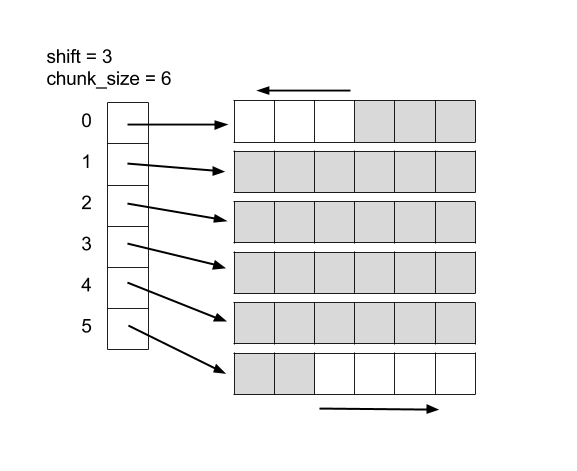

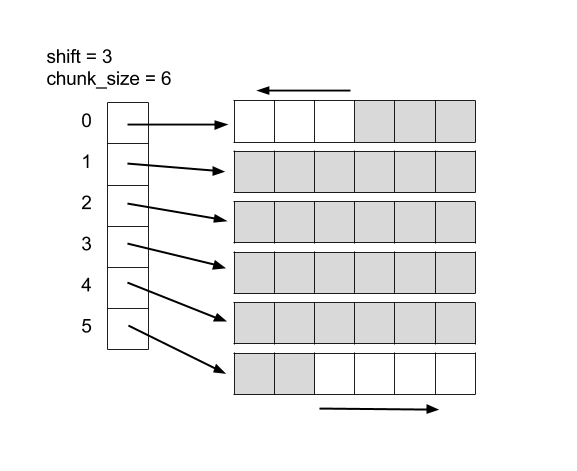

It’s typically implemented as a dynamic array (i.e. like a std::vector) of pointers to fixed-size arrays, like so:

That gets you the best of both worlds - it minimises both cache misses and copying on resizing.

Naturally there are trade offs, but that’s just the nature of programming.

(There’s an interestingset of benchmarks here. Naturally it can’t necessarily be generalised to all systems, but it’s useful nonetheless.)

I beg to differ.

auto charBuffer = std::make_unique<char[]>(1024);

That’s it. No deletion, the heap-allocated buffer deletes itself when the object falls out of scope and the generated code would be as if you’d written:

auto charBuffer = new char[1024];

// ...

delete charBuffer;

But you don’t need to worry about returning early without having deleted the buffer.

In fact, one that people often forget about: if a function called before delete throws an exception, the buffer doesn’t get deleted because delete is never reached.

With std::unique_ptr, even if an exception is thrown the buffer is still deallocated.

I.e. Leak:

auto charBuffer = new char[1024];

throwsAnException();

delete charBuffer;

No leak:

auto charBuffer = std::make_unique<char[]>(1024);

throwsAnException();

I know I’m perfectly capable of handling new and delete because I’ve written code that uses them extensively without leaking anything, but I still use the smart pointers for several reasons:

Reasons...

- There’s no need to write a custom destructor or move assignment operator - the default implementations provided by the compiler will defer to the appropriate functions provided by the smart pointer, which will handle any reference counting and releasing without even having to think abou it

- It’s harder to make a mistake - forgetting to delete is easy, but with smart pointers you don’t have to worry about it.

- The only thing you have to be careful of is to avoid cyclic references and strong references when using

std::shared_ptr, which is why std::weak_ptr exists - to break the cycles and provide a reference that won’t stop the memory being cleaned up.

- For

std::unique_ptr there’s practically no overheads, it’s just generating the code that you’d be writing yourself

Vectors of unique pointers...

Going back to the ‘vector of pointers’ case mentioned earlier,

if std::deque wasn’t suitable, a std::vector of std::unique_ptr would be a better bet than a std::vector of raw pointers because you wouldn’t have to worry about clean-up.

You’re still allowed access to the raw pointer through the use of .get() if you need to hold onto it briefly without taking full ownership of the pointer, so it should still be just as cheap, but you no longer need to worry about deallocating.

I disagree with this particular point, but possibly not in the way you’d expect.

I think everyone who is learning the language, regardless of what they want to do with it, should be taught to use constexpr and const variables for constants.

For Group 2, they probably shouldn’t even be taught about #define in the first place.

That’s a bold claim, but I stand by it.

As you say:

And constexpr variables are far easier to predict.

The value is fixed at compile time, so you’re not going to accidentally insert some code that does extra calculations at runtime.

For example:

#define FACT_4 factorial(4)

constexpr int fact_4 = factorial(4);

Whoever goes around flooding their program with FACT_4 is going to get a nasty surprise at runtime when they discover that FACT_4 isn’t actually a constant.

In my experience most of the people who turn their nose up at constexpr aren’t beginners,

they’re established programmers who think constexpr is just being used because it’s new and fancy.

constexpr is used because it’s predictable, obvious, safe, easy to use and solves a number of issues that macros have.

Also, since you mentioned static - be very careful with using static on global variables.

Generally it’s not what you actually want to be doing.