Looks very good! Which graphics mode are you using? What is the FPS on hardware?

Looks very nice. I would like to see the code; there may be things that can be optimized. Mode7 has already been done, but you could try to take it further and turn it into a small research project or even an engine, e.g.:

- Mip mapping – would get rid of aliasing in the distance. It would cost a little memory but not many CPU cycles I think.

- Texture filtering – this might be too slow but I’d like to see linear interpolation so that it’s not “blocky” – no one has tried that so far AFAIK. It would definitely look amazing and you could try to use it only e.g. near the camera.

- Also draw the ceiling – this can be done very cheaply, you just mirror the floor on screen. You can also use a different texture for the ceiling (use the computed texture coordinate from the floor, sample a different texture and mirror it on screen).

- Possibility to change height above the ground.

- Do something with the texture: use a procedural texture and/or animate it, warp it, draw something over it etc.

- I also wonder if drawing “hills”, according to some heightmap, would be possible.
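For the mip mapping idea, one cheap way to pick a level is to look at how many texels the sampler skips per screen pixel. In a mode 7 floor that step is constant along a scanline, so the level would only need to be chosen once per line. A minimal sketch, assuming fixed-point per-pixel texture steps; `mipLevelForStep`, `fixedBits` and `maxLevel` are hypothetical names, not taken from the demo:

```cpp
#include <cassert>
#include <cstdlib>

// Hypothetical helper: choose a mip level from the per-pixel texture step.
// stepX/stepY are fixed-point texel deltas per screen pixel with `fixedBits`
// fractional bits. Level 0 is the full-size texture; each further level is
// assumed to halve the texture in both directions.
int mipLevelForStep(int stepX, int stepY, int fixedBits, int maxLevel) {
    assert(fixedBits >= 0 && fixedBits < 31 && maxLevel >= 0);
    int ax = std::abs(stepX);
    int ay = std::abs(stepY);
    int step = (ax > ay ? ax : ay) >> fixedBits; // whole texels skipped per pixel
    int level = 0;
    while (step > 1 && level < maxLevel) {       // integer log2, clamped
        step >>= 1;
        ++level;
    }
    return level;
}
```

The loop is a handful of shifts per scanline, which fits the guess that mip mapping costs memory rather than CPU cycles.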

“No GPU, no FPU, no #madewithunity, and also no #madewithpokittolib. Just good old fashioned hardware coding.”

I used the same linebuffer trick I used in previous directmode tests, but with neither directmode nor Java, only minlib and C/C++. I stick to the same coding scheme as everything I have posted in the past few weeks. And at this point, the limitations imposed by having no framebuffer have turned into advantages, at least to my eyes.

I have no hardware, but after timing some frames in the emulator I would say between 25 and 30 fps. And there’s room for performance improvement, I guess.

AFAIK, this would be the first time it’s been done without a framebuffer.

I didn’t plan to go further with this mode 7 demo. But I agree mipmapping, background artwork, jumping/flying, ceiling and even distance fog should be easy to code. Texture interpolation and per-pixel procedurals would kill the framerate, IMHO.

Sorry, I have got many items left to strike out in my to-do list. I have already switched to my next project: a serious take on a Tetris clone. Again, it will not be the first, but I feel it needs to be done. I played that game a lot in the past, it deserves a proper port!

FYI, this is the inner loop for the right half of the scanline (there’s a “mirror” loop for the left half), coded in about 5 minutes and pretty similar to the one I coded for the rotozoomer.

s = source, d = destination, ptr = pointer to first pixel of the (8x128)x128 8bit tileset (one row of tiles).

I tried an experimental “caching” of the tile offset from previous pixel, but it can be improved further by calculating how many consecutive pixels come from the same tile.

    // Center --> Right
    int xs = xs0;
    int ys = ys0;
    int prevcell = -1;   // no cell cached yet (same bits as 0xFFFFFFFF, without the unsigned-to-int conversion)
    int celloff = 0;
    unsigned short *pd = pd0;

    for( int w = ( SCREEN_WIDTH >> 1 ); w != 0; w-- ) {
        // Integer texture-space position of this pixel
        int x = ( xs >> FIXED_MATH );
        int y = ( ys >> FIXED_MATH );

        // Cell (tile) coordinates inside the tilemap
        int xc = ( x >> NBITS_TEX_POS ) & ( ( 1 << NBITS_CELL_POS ) - 1 );
        int yc = ( y >> NBITS_TEX_POS ) & ( ( 1 << NBITS_CELL_POS ) - 1 );

        // Texel coordinates inside the tile
        int xt = x & ( ( 1 << NBITS_TEX_POS ) - 1 );
        int yt = y & ( ( 1 << NBITS_TEX_POS ) - 1 );

        // Look up the tile offset only when the cell changes
        int curcell = xc + ( yc << NBITS_CELL_POS );
        if( prevcell != curcell ) {
            prevcell = curcell;
            celloff = ( (int) m_tmap[ curcell ] ) << NBITS_TEX_POS;
        }

        *(pd++) = pal[ ptr[ celloff + xt + ( yt << ( NBITS_TEX_POS + NBITS_TILESET_SIZE ) ) ] ];

        xs += dxs;
        ys += dys;
    }
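The further improvement mentioned before the loop, working out how many consecutive pixels come from the same tile, could look roughly like the sketch below. It is only an illustration under assumptions: `pixelsLeftInTile` and `runLength` are hypothetical helpers that are not part of the posted code, and `tileBits` stands for `FIXED_MATH + NBITS_TEX_POS`.

```cpp
#include <cassert>
#include <climits>

// Hypothetical helper: number of consecutive pixels (including the current
// one) whose coordinate stays inside the current tile along one axis.
//   pos      : fixed-point texture coordinate (xs or ys in the loop above)
//   step     : fixed-point increment per pixel (dxs or dys)
//   tileBits : log2 of the tile size in fixed-point units
int pixelsLeftInTile(int pos, int step, int tileBits) {
    assert(tileBits > 0 && tileBits < 31);
    const int tileSize = 1 << tileBits;
    const int p = pos & (tileSize - 1);                    // offset inside the tile
    if (step > 0) return (tileSize - p + step - 1) / step; // ceil((tileSize - p) / step)
    if (step < 0) return (p - step) / -step;               // floor(p / |step|) + 1
    return INT_MAX;                                        // never leaves the tile on this axis
}

// Pixels that can be drawn before either coordinate crosses a tile boundary.
int runLength(int xs, int ys, int dxs, int dys, int tileBits) {
    const int rx = pixelsLeftInTile(xs, dxs, tileBits);
    const int ry = pixelsLeftInTile(ys, dys, tileBits);
    return rx < ry ? rx : ry;
}
```

With a run length in hand, the cell lookup and the `prevcell` comparison could move out of the per-pixel path and into an outer loop that runs once per tile crossing. Whether the two divisions per run pay off on this MCU would need to be measured.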

And the first time with the full resolution also. I used the lowres screen mode.

No big surprise, mode 7 texture mapping is well suited to a linebuffer, as it’s fully sequential and you don’t need to jump forward, skip lines or revisit previous ones.

The hard part for me will be doing a “doom” clone without a full framebuffer: either I get the walls textured (vertical sweep) or the floor (horizontal sweep), but not both. Maybe the right solution is “tiled rendering” ???

You want image order rendering then, which mode7 is. Maybe you want to try full 2D raycasting? That would be really interesting to see (I’ve only seen a very slow, unoptimized floating point port so far). I think using only a few simple shapes like spheres and planes could allow even interactive framerates.

In my raycasting lib I render everything vertically, even textured floor. You could use that library to make a demo that doesn’t use framebuffer.

Not sure if tiled rendering would solve the lack of framebuffer, I think it’s used just to reduce the amount of resources needed to render the frame, but am not sure.

This is okay, it’s just a shame you don’t share your full source with a license – someone else could try to take it further, use it for a game, improve it etc.

Challenge accepted. But after I take the tetris game to a WIP playable demo.

What do you mean by full 2D raycasting? 2.5D raycasting? Or 3D raycasting?

If it’s 2.5D, then I’m not sure what kind of engine you have in mind, to name a few:

- Wolfenstein 3d style raycasting: flat levels with walls at right angles, no texture mapping on the floor

- Doom: I don’t think the Doom engine uses raycasting, but it allows for walls and floors at any angle or height, though two traversable areas cannot be on top of each other (no room over room). It applies some simple lighting, allows looking up/down via “y-shearing”, uses sprites for objects and uses binary space partitioning to quickly select the portion of a level that the player could see at a given time.

- Duke Nukem 3d: definitely not a raycasting engine. The Build Engine it is based upon is quite interesting: it allows sloped floors and ceilings and uses sectors instead of BSP; sectors and portals allow for movable walls and mirrors, parallaxed skies in outdoor environments, a dynamic world through manipulation of sector attributes on-the-fly and usage of “sector effectors” to trigger changes, sector overlapping to a certain degree, and even voxel support for objects.

If you’re talking about a full 3D engine, then we should talk about shading: flat? gouraud shading? texture mapping without lighting? perspective correct texture mapping or just affine transformation? fading with distance or fog?

Also, what kind of object hierarchy has to be used to manage occlusion/visibility/collision?

About image order: my parallax scroll demo uses image order. Not every parallax engine allows for layers overlapping… take a look at the clouds… they overlap a bit and are being drawn back to front to show properly.

I second this sentiment.

Sharing the source code with the userbase gives other people a chance to expand on the work and produce something more.

Keeping it closed is likely to lead to this demo being forgotten about and buried amongst other inactive threads.

Go careful when trying to make a Tetris clone: The Tetris Company LLC enjoys fiercely killing off Tetris clones whenever they can.

The last well documented case was 7 years ago, but that’s not to say they aren’t still doing it, so either make sure to avoid their attention or ensure that your version is significantly different.

What I mean by 2D is 2D screen and 3D space, as opposed to 1D raycasting in 2D space (which is what I’ve done with my library). I.e. you render a 3D image by casting a ray for every pixel (not just every column) on the screen. If you then add secondary and shadow rays, it becomes ray tracing, which you can try too, but the first step is to do ray casting (without recursively cast rays).

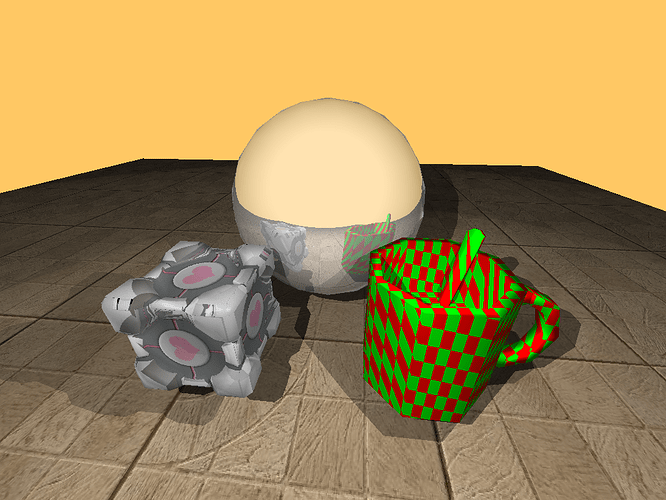

The basic implementation is actually pretty simple (find the intersection of a line with a given object by solving an equation, which is very simple e.g. for spheres), but it becomes more interesting if you don’t use floats and want to optimize it. If you have a static (non-animated) environment and a lot of objects, you can use acceleration structures such as quad trees for culling and fast intersections, but I wouldn’t do this. I’d only try to render a few spheres and planes, maybe cylinders and cubes, … Bounding spheres for non-spherical objects are a simple technique that could be tried.
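To make the "solve an equation" step concrete for spheres without floats, here is a minimal sketch. It assumes Q16.16 fixed point, a unit-length ray direction, and coordinates small enough that squared distances still fit in Q16.16 (roughly within 100 units). All names (`fx`, `Vec3`, `raySphere`, `isqrt64`) are made up for illustration and do not come from any existing library.

```cpp
#include <cassert>
#include <cstdint>

typedef int32_t fx;                      // Q16.16 fixed point (assumption)
const int FX_BITS = 16;
const fx FX_ONE = 1 << FX_BITS;

struct Vec3 { fx x, y, z; };

// Fixed-point multiply; relies on arithmetic right shift for negative values.
inline fx fxMul(fx a, fx b) { return (fx)(((int64_t)a * b) >> FX_BITS); }

inline fx dot(const Vec3 &a, const Vec3 &b) {
    return fxMul(a.x, b.x) + fxMul(a.y, b.y) + fxMul(a.z, b.z);
}

// Integer square root of a 64-bit value (bit-by-bit method).
uint32_t isqrt64(uint64_t v) {
    uint64_t res = 0;
    uint64_t bit = 1ULL << 62;
    while (bit > v) bit >>= 2;
    while (bit != 0) {
        if (v >= res + bit) { v -= res + bit; res = (res >> 1) + bit; }
        else                { res >>= 1; }
        bit >>= 2;
    }
    return (uint32_t)res;
}

// Solves |origin + t*dir - center|^2 = radius^2 for the nearest t >= 0.
// Returns true and writes t (Q16.16) on a hit in front of the origin.
// Rays that start inside the sphere are reported as misses in this sketch.
bool raySphere(const Vec3 &origin, const Vec3 &dir,
               const Vec3 &center, fx radius, fx *tHit) {
    assert(tHit != nullptr);
    const Vec3 oc = { origin.x - center.x, origin.y - center.y, origin.z - center.z };
    const fx b = dot(oc, dir);
    const fx c = dot(oc, oc) - fxMul(radius, radius);
    const fx disc = fxMul(b, b) - c;     // discriminant of the quadratic
    if (disc < 0) return false;          // the line misses the sphere
    const fx root = (fx)isqrt64((uint64_t)disc << FX_BITS);
    const fx t = -b - root;              // nearer of the two intersections
    if (t < 0) return false;             // sphere is behind the ray
    *tHit = t;
    return true;
}
```

For example, a ray from the origin along +Z hits a unit sphere centered at (0, 0, 5) at distance 4. The per-pixel cost is a few multiplies plus one integer square root per sphere that the line actually intersects.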

If this is too slow for realtime, you can use tricks, e.g. first quickly render a low resolution (subsampled) frame and then, if the camera didn’t move, keep casting additional rays and improving the resolution. Actually I think – if you don’t use a framebuffer – there might be a more elegant way:

Don’t cast rays strictly from top left to bottom right by single pixels, but keep jumping by some offset – e.g. you first draw the top left pixel of the screen, then jump 4 pixels to the right and so on… when you get to the end of the screen, go to the second pixel from top left and repeat this. So the order of pixels could be e.g.:

    0 3 5
    7 1 4
    6 8 2
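The jumping order described above (a fixed stride, then restart at the next offset) can be sketched as a pair of nested loops. This is an illustration only: `interleavedOrder` is a hypothetical helper, and pixel indices are assumed to be row-major with 0 at the top left.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: returns the order in which pixel indices
// (row-major, 0 = top left) are visited when jumping by a fixed stride
// and restarting one pixel further along after each pass.
std::vector<int> interleavedOrder(int pixelCount, int stride) {
    assert(pixelCount >= 0 && stride > 0);
    std::vector<int> order;
    order.reserve(pixelCount);
    for (int start = 0; start < stride; ++start)          // one pass per offset
        for (int i = start; i < pixelCount; i += stride)  // jump by the stride
            order.push_back(i);
    return order;
}
```

On the device there would be no need to store the list; the two loops can drive the ray casting directly, and the frame can be abandoned after any pass if the camera moves.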

Shading is the next step – again, it’s not difficult but performance is an issue. Start with flat shading and distance fog (cheap), then try Gouraud, then Phong etc. For simplicity I’d only have one global directional light. You can continue with textures (procedural ones are especially useful here – a simple checkerboard can be as cheap as sampling a bitmap texture – actually it will be cheaper because for a 2D texture you’ll need to do extra computation to get UV coords from the 3D space coords of the intersection). The advantage of raycasting (tracing) is that you don’t need to do texture coordinate correction, it is correct by default.
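Two of the cheap options mentioned here, a procedural checkerboard and distance fog, fit in a few lines of integer code. A minimal sketch, assuming Q16.16 world coordinates and 8-bit color channels; `checker` and `fogBlend` are hypothetical names:

```cpp
#include <cassert>
#include <cstdint>

const int FX_BITS = 16;   // Q16.16 world coordinates (assumption)

// Procedural checkerboard on the XZ plane: returns 0 or 1 depending on which
// unit cell the intersection point falls in. No UV computation is needed.
int checker(int32_t x, int32_t z) {
    return ((x >> FX_BITS) ^ (z >> FX_BITS)) & 1;
}

// Linear distance fog on one 8-bit channel: blends `color` toward `fog`
// as `dist` goes from 0 to `maxDist` (same units, maxDist > 0).
uint8_t fogBlend(uint8_t color, uint8_t fog, int32_t dist, int32_t maxDist) {
    assert(maxDist > 0);
    if (dist <= 0) return color;
    if (dist >= maxDist) return fog;
    const int64_t f = ((int64_t)dist << 8) / maxDist;      // blend factor, 0..255
    return (uint8_t)((color * (256 - f) + fog * f) >> 8);
}
```

The checkerboard costs two shifts, an XOR and a mask per hit. The fog costs a division per pixel in this form, which could be swapped for a small lookup table indexed by distance.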

Doom and Duke Nukem engines aren’t raycasting, they’re BSP renderers (Doom uses sectors too) – the Build engine is just more advanced and uses additional tricks. No one has done that on Pokitto so far – you can add it to your list (it’s on mine as well). The main reason I haven’t started it is that you need to create extra tools for creating and compiling maps – I preferred raycasting because you can edit the maps in the source code and don’t have to compile them.

I see, you mean real time raytracing (first ray only): https://en.m.wikipedia.org/wiki/Ray_tracing_(graphics)#In_real_time

I have played with RTR some years ago using GPU shaders (even on an iPhone 3GS and also on Android) not only on spheres but also volume rendering of isosurfaces / implicit surfaces:

https://en.m.wikipedia.org/wiki/Isosurface

https://en.m.wikipedia.org/wiki/Implicit_surface

So, it’s ok, but I’m not sure whether it’s going to slow to a crawl on an MCU without floating point support.

Well, whether it’s going to be real time depends on what FPS you reach. Even an offline renderer is useful; you could e.g. render environments for games like Myst at runtime, without having to store the images anywhere.

I’ve once written a ray tracer too, it was only SW and worked on polygonal models:

It was actually a distributed ray tracer, so it could do things like depth of field, matte glass, soft shadows etc. This is also another extension that’s easy to add once you have basic ray casting implemented.

Casting rays has some advantages over traditional rasterization and allows you to do cool things.

Practically any floating point math is replaceable with fixed point, I think there should be no problem and I see you can do it from the snippet you’ve posted. Then you might try to apply the tricks and optimize the scene itself so that it could really be interactive.

Although I think you can’t go for full native resolution this time, more like the 110x88.

Before you start this, I’d like to suggest you consider making it a free/open-source library, and independent of any platform, as I do with my libraries (small3dlib, raycastlib) – this way your code doesn’t end up wasted and people don’t have to reimplement it later. But it’s only a suggestion of course, just think about it.

I also wrote my own triangle mesh raytracer when I was young but did not implement many of the features your raytracer has. Good work!

I suppose it’s time to see if I can still code a basic sphere raytracer from scratch and by heart in less than 10 minutes.

I found an interesting slideshow that explains raytracing and its benefits (not including photorealism in next gen games): https://my.eng.utah.edu/~cs6965/papers/a1-slusallek.pdf

Realtime raytracing focuses on interactive frame rates, giving up some accuracy and some time-costly features in favor of rendering speed. You have already pointed out some “shortcuts” such as fancy sampling, removing secondary rays and limiting scene complexity. There are others: environment mapping, shadow maps, normal maps, …

That would be just awesome for a pseudo 3d golf game on the Pokitto.

Mode 7 graphics should be enough for a pseudo 3d golf game. Unless you want to see detailed grass, realistic hair reflections, the rays of sunlight refracting through the water drops coming from the irrigation system, realistic water reflections and lens flares.

This would be a motivation for adding the hill rendering to mode7 as I mentioned.

Well this could enable us to have rendered trees, hills, bunkers, and being slow wouldn’t be an issue since it’s a golf game. And like the old PC golf games you wouldn’t show anything before it’s all rendered.

Sold! When can you deliver?